YouTube videos provide low-quality educational content about rotator cuff disease


Abstract

Background

YouTube has become a popular source of healthcare information in orthopedic surgery. Although quality-based studies of YouTube content have been performed for information concerning many orthopedic pathologies, the quality and accuracy of information on the rotator cuff have yet to be evaluated. The purpose of the current study was to evaluate the reliability and educational content of YouTube videos concerning the rotator cuff.

Methods

YouTube was queried for the term “rotator cuff.” The first 50 videos from this search were evaluated. Video reliability was assessed using the Journal of the American Medical Association (JAMA) benchmark criteria (range, 0–4). Educational content was assessed using the global quality score (GQS; range, 0–5) and the rotator cuff-specific score (RCSS; range, 0–22).

Results

The mean number of views was 317,500.7±538,585.3. The mean JAMA, GQS, and RCSS scores were 2.7±1.0, 3.7±1.0, and 5.6±3.6, respectively. Non-surgical intervention content was independently associated with a lower GQS (β=–2.19, p=0.019). Disease-specific video content (β=4.01, p=0.045) was the only independent predictor of RCSS.

Conclusions

The overall quality and educational content of YouTube videos concerned with the rotator cuff were low. Physicians should caution patients about using such videos as resources for decision-making and should counsel them appropriately.

INTRODUCTION

The internet is an increasingly accessed database of information; it has been estimated that 56% of the total world population uses the internet today, compared to only 5% in 2000 [1]. YouTube is considered one of the most popular internet sites, with more than 1.9 billion users each month and one billion hours of video watched each day [2]. YouTube videos allow for visual learning of specific content, much of which concerns orthopedic information applicable to patient education [3]. Furthermore, it has been demonstrated that the treatment decisions of 75% of patients were influenced by knowledge acquired through online health information searches [4]; therefore, it is essential that these videos provide accurate and reliable information.

Patients often turn to YouTube for information on some of the most prevalent orthopedic conditions encountered in practice, such as anterior cruciate ligament injuries, to gain a better understanding of their condition, despite the low quality of these videos [5]. Other studies have also reported poor educational content and reliability in videos concerning various orthopedic conditions [3,5-8]. As YouTube lacks a formal video regulation process for promoting accurate information, it is possible that YouTube videos concerning many other unexplored orthopedic topics are also of low quality [9]. There is growing concern that the educational information on mainstream websites such as YouTube contains a high proportion of uninformed or deliberately deceptive opinions [10].

Among musculoskeletal complaints that present to primary care offices, shoulder pain is the second most common and is observed in 51% of patients [11]. Moreover, the prevalence of rotator cuff tears has been reported to be as high as 62% in some populations [12]. Orthopedic injuries are among the leading healthcare topics searched for on the internet [13], and YouTube hosts over 177,000 videos regarding rotator cuff disease [2]; however, the quality and reliability of the information contained in these videos are unknown. The purpose of the current study was to evaluate the reliability and educational content of YouTube videos concerning the rotator cuff. The authors hypothesized that these videos would have relatively low-quality educational content and poor reliability when assessed with outcome tools designed to evaluate online videos.

METHODS

YouTube Search

The current study was exempt from Institutional Review Board approval. The YouTube online library (https://www.youtube.com) was queried using the keyword “rotator cuff” on May 4, 2020. In accordance with previous YouTube-based studies in the orthopedic literature [14,15], the first 50 videos sorted by relevance for this keyword were recorded for evaluation. This search strategy reflects what users will view when searching for the term “rotator cuff” with the default search settings, is the most commonly employed search strategy in health informatics studies of YouTube content [4], and has been reported to be a feasible method of video selection [3]. If a video returned by the initial search was not in English or was an audio soundtrack, it was excluded, and the next consecutive video was used instead.

Extracted Video Characteristics

Each video had the following variables recorded for the final analysis: (1) title; (2) video duration; (3) number of views; (4) video source/uploader; (5) type of content; (6) days since upload; (7) view ratio [views/days]; (8) number of likes; (9) number of dislikes; (10) like ratio [like×100/(like+dislike)]; and (11) the video power index [VPI]. The VPI is calculated as like ratio×view ratio/100. This measurement is an index of video popularity based on the number of views and likes and has been used in previous studies [3]. Higher values indicate greater video popularity, and there is no upper limit to this metric. The mean VPI for orthopedic videos has ranged from 92.6 to 301.9 in previous studies [3,6,15].
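The derived metrics in items (7), (10), and (11) follow directly from the raw counts. The minimal Python sketch below applies the formulas stated above; the `VideoStats` type, the function names, and the example counts are hypothetical and only illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoStats:
    """Raw counts recorded for one video (hypothetical container)."""
    views: int
    days_since_upload: int
    likes: int
    dislikes: int


def view_ratio(v: VideoStats) -> float:
    """View ratio = views / days since upload."""
    if v.days_since_upload <= 0:
        raise ValueError("days_since_upload must be positive")
    return v.views / v.days_since_upload


def like_ratio(v: VideoStats) -> float:
    """Like ratio = like * 100 / (like + dislike)."""
    total = v.likes + v.dislikes
    if total == 0:
        raise ValueError("like ratio is undefined for a video with no ratings")
    return v.likes * 100 / total


def video_power_index(v: VideoStats) -> float:
    """VPI = like ratio * view ratio / 100 (no upper bound)."""
    return like_ratio(v) * view_ratio(v) / 100


# Invented counts: 30,000 views over 100 days, 950 likes, 50 dislikes.
example = VideoStats(views=30_000, days_since_upload=100, likes=950, dislikes=50)
print(video_power_index(example))  # 285.0
```

Because the like ratio is a percentage that is close to 100 for well-liked videos, the VPI of such a video is roughly its daily view count.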

Video Upload Sources

Video sources/uploaders were categorized by the following: (1) academic (pertaining to authors/uploaders affiliated with research groups or universities/colleges), (2) physicians (independent physicians or physician groups without research or university/college affiliations), (3) non-physicians (health professionals other than licensed medical doctors), (4) trainers, (5) medical sources (content or animations from health websites), (6) patients, and (7) commercial sources.

Video Content Categories

Content was categorized as one of the following: (1) exercise training, (2) disease-specific information, (3) patient experience, (4) surgical technique or approach, (5) non-surgical management, and (6) advertisement.

The Assessment of Video Reliability and Educational Content

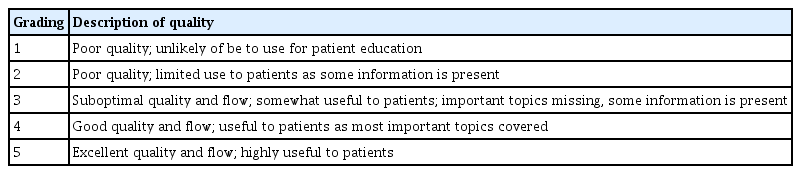

The Journal of the American Medical Association (JAMA) benchmark criteria were used to assess video accuracy and reliability [16]. The JAMA benchmark criteria (Table 1) offer a non-specific and objective tool consisting of four individual criteria that are identifiable in online videos and resources. To use this tool, an observer assigns one point for each criterion present in a video. A score of four indicates high source accuracy and reliability, whereas a score of zero indicates poor source accuracy and reliability.
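Table 1 is not reproduced here; assuming it lists the four standard JAMA benchmark criteria (authorship, attribution, disclosure, and currency), scoring a video reduces to counting the criteria a rater marks as present. A minimal sketch; `jama_score` is a hypothetical helper, not part of any published tool.

```python
# Assumed to match Table 1: the four standard JAMA benchmark criteria.
JAMA_CRITERIA = ("authorship", "attribution", "disclosure", "currency")


def jama_score(criteria_met) -> int:
    """Return the JAMA score (0-4): one point per criterion present in the video."""
    met = set(criteria_met)
    unknown = met.difference(JAMA_CRITERIA)
    if unknown:
        # Reject typos so that a misspelled criterion is not silently scored as absent.
        raise ValueError(f"not JAMA benchmark criteria: {sorted(unknown)}")
    return sum(1 for criterion in JAMA_CRITERIA if criterion in met)


# A video that names its author and shows an upload date, but cites no sources
# and discloses no sponsorship.
print(jama_score({"authorship", "currency"}))  # 2
```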

Non-specific educational content quality was assessed using the global quality score (GQS). The GQS [3,17] evaluates the educational value of online content using five criteria (Table 2). One point is assigned for each of the five identifiable criteria present in a video. The GQS has a maximum score of 5, which indicates high educational quality.

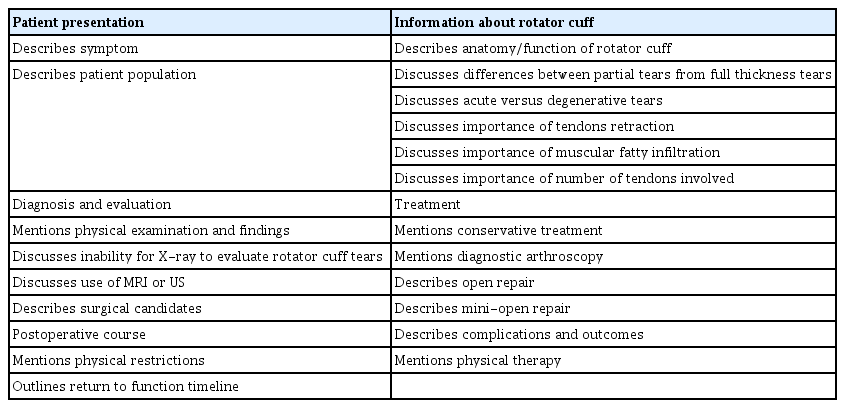

To specifically evaluate the quality of educational content on the rotator cuff, we created the rotator cuff-specific score (RCSS), which is composed of 20 items based on the guidelines published by the American Academy of Orthopaedic Surgeons [18]. The use of novel topic-specific orthopedic instruments to assess the educational quality of online videos has been demonstrated in previous literature [19]. The RCSS specifically evaluates information on (1) common patient presentations and symptoms, (2) anatomy of the rotator cuff, (3) diagnosis and evaluation of rotator cuff pathologies, (4) treatment options, and (5) the postoperative course and expectations (Table 3). One point is assigned for each item present, for a maximum possible score of 22, with a higher score indicating better rotator cuff-specific educational quality. One author scored all videos. A subset of 10 videos was selected for each of the three reliability and quality scores (total, 30 videos) to be analyzed again by a separate author to determine inter-observer reliability. Inter-observer reliability was 0.98 (0.96–0.99) for the JAMA score, 0.97 (0.96–0.98) for the GQS, and 0.90 (0.88–0.93) for the RCSS.
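The inter-observer reliability statistic is not named above. Assuming it was an intraclass correlation coefficient (ICC), a common choice for continuous scores from two raters, the sketch below computes ICC(2,1) (two-way random effects, absolute agreement, single rater, in the Shrout and Fleiss formulation) from a videos × raters table. The function name and example scores are hypothetical, the authors' exact Stata model is not stated, and confidence intervals are omitted.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings[i][j] is the score that rater j gave to video i.
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two rated videos")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("every video needs scores from the same >= 2 raters")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]                       # per video
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]  # per rater

    # Partition the total sum of squares into videos, raters, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when all scores are identical")
    return (ms_rows - ms_error) / denominator


# Invented JAMA scores for five videos from two raters.
scores = [[3, 3], [2, 2], [4, 4], [1, 2], [3, 3]]
print(round(icc_2_1(scores), 3))
```

Values close to 1, such as those reported above, indicate that the two raters' scores are nearly interchangeable.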

Statistical Analysis

All statistical tests were performed with Stata ver. 15.1 (StataCorp., College Station, TX, USA). Descriptive statistics were used to quantify the video characteristics as well as the video reliability and quality scores. Continuous variables were presented as means with standard deviations and ranges. Categorical variables were shown as relative frequencies with percentages. One-way analysis of variance (ANOVA) tests (for normally distributed data) and Kruskal-Wallis tests (for non-normally distributed data) were used to determine whether the video reliability and quality differed based on (1) video source and (2) video content. Multivariate linear regression analyses were used to determine the influence of specific video characteristics on video reliability (JAMA score) and educational quality (GQS and RCSS). A two-tailed p-value of <0.05 was considered to indicate statistical significance.
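To illustrate what the one-way ANOVA compares, the standard-library sketch below (hypothetical function `one_way_anova_f`, invented scores) returns the F statistic and its degrees of freedom: the ratio of between-group to within-group mean squares. It is not the authors' Stata procedure. The p-value would come from the F distribution, for example `scipy.stats.f.sf(F, df_between, df_within)`; SciPy also provides `scipy.stats.f_oneway` and, for the rank-based alternative used with non-normal data, `scipy.stats.kruskal`.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    values = [x for g in groups for x in g]
    n_total, k = len(values), len(groups)
    if n_total <= k:
        raise ValueError("need more observations than groups")

    grand_mean = sum(values) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variation")

    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Invented JAMA scores grouped by upload source.
by_source = {
    "physician": [3, 3, 4, 2],
    "non-physician": [2, 1, 2],
    "commercial": [3, 2, 2],
}
print(one_way_anova_f(list(by_source.values())))
```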

RESULTS

All of the first 50 videos returned by the initial query were included in the final analysis. Video duration ranged from 1 to 23.5 minutes, with a mean±standard deviation of 6.9±4.8 minutes. The mean number of views was 317,500.7±538,585.3, and collectively, the 50 videos were viewed 15,875,035 times. The mean VPI was 296.98±435.3. Other video characteristics are listed in Table 4. The most common uploaders were physicians (46%), while academic institutions accounted for the lowest relative frequency of video uploads at 4%. The most common content category was disease-specific information (48%), while exercise training content accounted for the lowest proportion at 2%.

Video Reliability and Educational Content Analysis

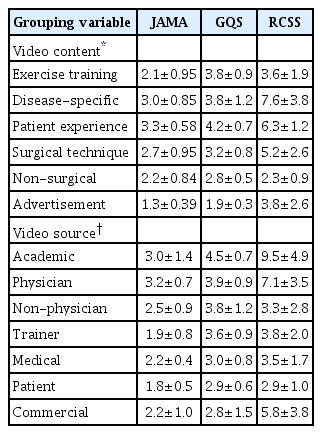

The mean JAMA score was 2.66±0.96, the mean GQS was 3.68±1.04, and the mean RCSS was 5.64±3.56. An ANOVA was used to determine whether video reliability and the quality of educational content differed by upload source and by content classification (Table 5). Significant between-group effects were observed for the JAMA score based on the content category (p=0.018), with videos concerning patient experiences having the highest mean JAMA score. Significant between-group effects were also observed for the JAMA score based on the video upload source (p=0.007), with videos uploaded by physicians receiving the highest mean JAMA score. Between-group effects were also observed for the GQS based on the content category (p=0.038), with exercise training and disease-specific content conferring higher mean scores. There was no association between the upload source and the mean GQS (p=0.165). Statistically significant between-group effects were found for the RCSS based on both content category (p=0.001) and upload source (p=0.011), with disease-specific content and academic institution uploads having the highest mean RCSS.

Predictors of Video Reliability and Educational Content Quality

The influence of video characteristics, the video content category, and the video upload source on the JAMA score, GQS, and RCSS was investigated using multivariate linear regression models. These models did not identify any independent associations between the video characteristics, content category, or upload source and the JAMA score (all p>0.05). For the GQS, non-surgical intervention content was independently associated with lower GQS scores (β=–2.19, p=0.019). Disease-specific video content (β=4.01, p=0.045) was the only independent predictor of the RCSS.
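In a linear model with dummy-coded content categories, a coefficient such as β=4.01 for disease-specific content is read as the expected difference in RCSS relative to the omitted reference category, holding the other covariates fixed. The covariates and reference category are not listed here, and the models were fitted in Stata; the pure-Python sketch below (hypothetical function `ols`, invented data) only shows how such coefficients are estimated by ordinary least squares and omits standard errors and p-values.

```python
def ols(design, response):
    """Ordinary least squares coefficients via the normal equations.

    design: list of rows; each row starts with 1 for the intercept, followed by
    predictor values (e.g., 0/1 dummies for content category).
    """
    p = len(design[0])
    if len(design) <= p:
        raise ValueError("need more observations than coefficients")
    # Build the augmented matrix [X^T X | X^T y].
    aug = []
    for i in range(p):
        xtx_row = [sum(row[i] * row[j] for row in design) for j in range(p)]
        xty = sum(row[i] * y for row, y in zip(design, response))
        aug.append(xtx_row + [xty])
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular design (e.g., a full set of collinear dummies)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [v / lead for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][p] for i in range(p)]


# Columns: intercept, disease-specific dummy, duration (minutes); values invented.
design = [[1, 0, 2], [1, 0, 6], [1, 1, 4], [1, 1, 10], [1, 0, 8], [1, 1, 2]]
rcss = [5, 7, 9, 12, 8, 8]  # constructed as 4 + 3*dummy + 0.5*duration
print(ols(design, rcss))
```

Because the invented scores were built exactly from 4 + 3×dummy + 0.5×duration, the fit recovers an intercept of 4, a dummy coefficient of 3, and a duration slope of 0.5, up to floating-point rounding. Including a dummy for every category alongside the intercept makes the design singular, which is why one category must serve as the reference.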

DISCUSSION

The main findings of the current study were that (1) the first 50 YouTube videos returned for the keyword “rotator cuff” alone accrued a total of 15,875,035 views; (2) the mean JAMA score and RCSS of all videos were 2.7 and 5.6, respectively, suggesting low video reliability and rotator cuff-specific educational content quality; (3) video reliability and educational value differed based on the type of video content (JAMA score, GQS, and RCSS) and the video upload source (JAMA score and RCSS); and (4) disease-specific video content was a significant independent predictor of a higher RCSS.

The current study suggests that the rotator cuff is of interest to a large online audience, as the first 50 videos returned by the simple search term “rotator cuff” were viewed a total of 15,875,035 times. On average, this means that these 50 videos are viewed 297.50 times per day. This finding is unsurprising because YouTube has become a highly utilized source for gathering health information [20]. Interestingly, the mean number of likes of all videos was 3908.27, while the mean number of dislikes was only 98.42. Furthermore, the mean VPI (a measure of video popularity) was 296.98, reaffirming that videos concerning the rotator cuff are both highly liked and frequently viewed. This value was higher than previously reported YouTube VPIs for disc herniation (92.6) [6] and kyphosis (174.4) [3] and comparable to that reported for meniscectomy (301.9) [15]. Despite the popularity of rotator cuff videos, the mean JAMA score was 2.66±0.96, the mean GQS was 3.68±1.04, and the mean RCSS was 5.64±3.56, suggesting poor video reliability, accuracy, and rotator cuff-specific educational content. Taken together, these findings suggest that many viewers are satisfied with videos that provide them with unreliable and low-quality information, which may misinform both their motivation to seek treatment and their expectations for outcomes.

Although physicians were the most common uploaders and disease information was the most common content category, the reliability, accuracy, and rotator cuff-specific educational content of the videos were low. These findings are consistent with previous studies that have evaluated the quality and content of orthopedic topics on YouTube. Indeed, other orthopedic-specific YouTube studies concerning kyphosis [3], disc herniation [6], the anterior cruciate ligament [5], lumbar discectomy [21], and femoroacetabular impingement syndrome [22] have all concluded that the quality and reliability of YouTube videos discussing these topics are strikingly low. Given that YouTube lacks an editorial process for uploaded videos and that any user can upload any video of their choice, it is plausible that this lack of restrictions allows inaccurate content to be posted. These findings highlight the need for higher-quality orthopedic educational content for viewers and patients on YouTube or for the development of a new online platform that only allows peer-reviewed content.

Interestingly, the ANOVA in the current study demonstrated that the mean JAMA score (a measure of video reliability and accuracy) was higher for videos that discussed patient experiences as well as for videos uploaded by physicians. This finding may suggest that patients' testimonies of their experiences with the treatment of rotator cuff pathology are relatable to other viewers and that physicians who treat these pathologies provide more reliable information. Furthermore, the mean GQS (a non-specific measure of educational quality) was higher in videos whose content was based on exercise training and disease information; the mean RCSS was higher in videos concerning disease information and in those uploaded by academic institutions; and disease-specific video content was a significant independent predictor of a higher RCSS. As these two measures capture non-specific and rotator cuff-specific educational quality, respectively, it is plausible that disease-specific content provides the best educational quality for viewers and patients. Academic institutions that treat or study the rotator cuff would also be expected to produce videos of higher educational value. Although these associations exist statistically, it is still important to recognize that, overall, the quality and reliability of the videos evaluated in the current study were low and that future efforts should be made to increase the quality of such videos. In particular, treating healthcare providers may play a larger role in identifying high-quality resources and counseling patients accordingly. Given the clear demand for online video content as an educational resource, healthcare providers should make efforts to produce high-quality videos for patient education.

As more orthopedic surgeons embrace social media for physician-patient engagement and marketing [23], consideration should be given to utilizing these platforms to offer accurate alternatives to unregulated resources, such as YouTube.

The current study had several limitations. The assessment of a small subset of the many YouTube videos returned by the query “rotator cuff” may not provide a complete representation of all available videos on this topic. However, selection bias was minimized by systematically analyzing the first 50 videos, and it has been reported that the majority of internet users confine their searches to the first two pages returned by a search [24], which is consistent with the methods employed here. The current study also used reliability and quality assessment tools that have not been validated, despite their widespread use in studies evaluating online resources. As all three tools have demonstrated excellent inter-observer reliability, both repeatedly in the literature and in the current study, it is likely that the low-quality findings among the included videos are an accurate assessment.

The overall quality and educational content of YouTube videos concerned with the rotator cuff were low. Physicians should caution patients about using such videos as resources for decision-making and should counsel them appropriately.

Notes

Financial support

None.

Conflict of interest

Kyle N. Kunze serves on the editorial board of Arthroscopy Journal. Jorge Chahla is an unpaid consultant for Arthrex, Inc., CONMED Linvatec, Ossur, and Smith and Nephew and serves on the board of the American Orthopedic Society for Sports Medicine, the Arthroscopy Association of North America, and the International Society of Arthroscopy, Knee Surgery, and Orthopedic Sports Medicine. Nikhil N. Verma is a member of the American Orthopedic Society for Sports Medicine, American Shoulder and Elbow Surgeons, and the Arthroscopy Association of North America; is on the governing board of Knee and SLACK Incorporated; receives research support from Arthrex, Inc., Breg, Ossur, Smith and Nephew, and Wright Medical Technology, Inc.; receives royalties from Arthroscopy and Vindico Medical-Orthopedics Hyperguide; receives stock options from Cymedica and Omeros; and is a paid consultant for Orthospace and Minivasive. Adam B. Yanke receives research support from Arthrex, Inc., CONMED Linvatec, Olympus, Organogenesis, Vericel; receives stock options from and is an unpaid consultant for Patient IQ; is an unpaid consultant for Sparta Biomedical; and is a paid consultant for JRF Ortho. No other potential conflicts of interest relevant to this article were reported.