Rotator cuff repair: what questions are patients asking online and where are they getting their answers?

Abstract

Background

This study analyzed questions searched by rotator cuff patients and determined types and quality of websites providing information.

Methods

Three strings related to rotator cuff repair were explored by Google Search. Result pages were collected under the “People also ask” function for frequent questions and associated webpages. Questions were categorized using Rothwell classification and topical subcategorization. Webpages were evaluated by Journal of the American Medical Association (JAMA) benchmark criteria for source quality.

Results

One hundred twenty “People also ask” questions were collected with associated webpages. Using the Rothwell classification of questions, queries were organized into fact (41.7%), value (31.7%), and policy (26.7%). The most common webpage categories were academic (28.3%) and medical practice (27.5%). The most common question subcategories were timeline of recovery (21.7%), indications/management (21.7%), and pain (18.3%). Average JAMA score for all 120 webpages was 1.50. Journal articles had the highest average JAMA score (3.77), while commercial websites had the lowest JAMA score (0.91). The most commonly suggested question for rotator cuff repair/surgery was, “Is rotator cuff surgery worth having?,” while the most commonly suggested question for rotator cuff repair pain was, “What happens if a rotator cuff is not repaired?”

Conclusions

The most commonly asked questions pertaining to rotator cuff repair evaluate management options and relate to timeline of recovery and pain management. Most information is provided by medical practice, academic, and medical information websites, which have highly variable reliability. By understanding questions their patients search online, surgeons can tailor preoperative education to patient concerns and improve postoperative outcomes.

Level of evidence

IV.

INTRODUCTION

The internet is the easiest source for patients to find quick answers to their healthcare-related questions [1,2]. Approximately 50% of orthopedic patients use the internet to investigate their conditions, while 30% of these internet users bring questions to their surgeons based on online information [3]. Search engines such as Google and Yahoo account for an estimated 30% of global web traffic [4] and are commonly used for information prior to seeing a physician or following an appointment. Burrus et al. [5] found that a group of 1,296 patients surveyed at an orthopedic clinic were more likely to research their orthopedic problems on the internet than on the websites of the treating institutions. While adequate preoperative education promotes informed decision-making [6-10], internet utilization as a primary resource for healthcare information presents two major problems: accuracy of information and patient comprehension. Although the internet is a powerful tool when utilized properly, the quality of healthcare information on the internet is highly variable, with web searches yielding information that can be difficult to comprehend, of poor quality, misleading, or even false [11,12].

Google dominates the online search engine sphere, accounting for 91.9% of search engine market share worldwide and 87.6% of the share in the United States [13,14]. Leveraging the Google Search algorithm to better understand orthopedic search trends by patients can help inform personalized orthopedic care. When a patient conducts a search on Google, the first page of results generally contains 8–10 of the most relevant items. Google Search results also include a “People also ask” section that utilizes machine learning to provide additional questions that patients may have based on data gathered from the searches of other internet users. Each “People also ask” question is followed by a brief section of text attempting to answer the question, together with a hyperlink to the webpage from which the information originated. The information returned in this section is not academically verified or vetted for quality.

Rotator cuff repair is among the most common orthopedic procedures in the United States, with more than 460,000 surgeries performed annually [15]. Despite the ubiquity of rotator cuff repair, patients are typically unfamiliar with the specific details of surgery and the recovery process. Patients in such instances might consult internet search engines for quick answers regarding surgery. It is important to be aware of the types of questions and resources that users search online regarding rotator cuff surgery in order to alert surgeons to patient knowledge gaps and the quality of the information available.

Therefore, the purpose of this study is to investigate the questions rotator cuff repair patients search online and to determine the type and quality of webpages provided to patients from top results to each query based on the “People also ask” algorithm.

METHODS

No Institutional Review Board approval or informed consent was required for this study.

The methods of our study were adapted from previous research by Shen et al. [16]. Search queries were performed independently by two authors (AJH and KDC) on Google Search on August 18 and August 19, 2021, using the following strings: “rotator cuff repair pain,” “rotator cuff repair,” and “rotator cuff surgery.” Results from the “rotator cuff repair” and “rotator cuff surgery” searches were combined to represent the average patient search online. To avoid bias from personalized search results influenced by prior search history, searches were prospectively conducted on a newly installed Google Chrome application (Google Inc.) with no prior queries. Any previously installed Google Chrome application was uninstalled, and hard drives were subsequently searched for any remaining files containing Google Chrome data, which were deleted if encountered.

For each search query, the “People also ask” tab was expanded until approximately 100 suggested searches appeared on the page, following prior studies that include between 50 and 150 websites [16,17]. Each “People also ask” question was paired with a single hyperlink to a webpage. Suggested questions and associated webpage hyperlinks were manually collected into a data sheet using the automated Google Chrome extension Scraper ver. 1.7 (Google Inc.). Questions clearly unrelated to the topic of rotator cuff repair were excluded from the dataset. The final dataset consisted of the remaining “People also ask” questions from each search string that pertained to rotator cuff repair.

Each resultant question was categorized using the Rothwell classification into one of three themes—fact, policy, or value [18,19]. For this study, questions were subcategorized based on content into one of the following categories: specific activities, timeline of recovery, restrictions, technical details, cost, indications/management, risks/complications, pain, longevity, and evaluation of surgery. Further descriptions and examples of Rothwell classification can be found in Table 1.

Each website hyperlink was visited, and the website source was categorized as either academic, commercial, government, journal, legal, medical information site, medical practice, non-medical media site, or single-surgeon personal [16,20]. A description and example of each website classification can be found in Supplementary Table 1.

Each website was scored for information quality on a four-point scale according to the Journal of the American Medical Association (JAMA) benchmark criteria, which include points for authorship, attribution, currency, and disclosure [16,21]. Descriptions of the requirements to receive a point for each criterion can be found in Table 2. The question classification, website classification, and JAMA benchmark score were compiled independently by two authors (AJH and KDC) after agreement was established for categorical definitions. Discrepancies were reviewed by a third author (JRM) as a tiebreaker to decide final categorization.
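The scoring rule described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' tooling: the class name `JamaAssessment` and its field names are hypothetical, and each boolean stands for a rater's judgment that the webpage met the corresponding criterion as defined in Table 2. It assumes one point per satisfied criterion, giving a total between 0 and 4.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JamaAssessment:
    """Hypothetical record of one rater's judgment for one webpage."""

    authorship: bool   # criterion met, per the rater's reading of Table 2
    attribution: bool
    currency: bool
    disclosure: bool

    def score(self) -> int:
        # Assumed rule: one point per satisfied criterion (range 0 to 4).
        return sum((self.authorship, self.attribution,
                    self.currency, self.disclosure))


# Example: a page that names its author and cites sources, but shows
# no date and no disclosure statement.
example_page = JamaAssessment(authorship=True, attribution=True,
                              currency=False, disclosure=False)
example_score = example_page.score()
```

Averaging `score()` over all pages in a website category would give a category mean of the kind reported in the Results.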

Cohen’s kappa coefficient was used to evaluate interobserver reliability of question classification and website classification. Pearson’s chi-square test and Student’s t-test were used to evaluate the results for significance. Statistical significance was set at P<0.05.
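For readers unfamiliar with Cohen’s kappa, the following Python sketch shows how the statistic is computed for two raters who each assign one label per item. It is a generic illustration under stated assumptions: the function name `cohens_kappa` and the four example labels are invented for demonstration and are not the study data or the software the authors used.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must label the same non-empty item set.")
    n = len(rater_a)

    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: sum over labels of the product of each rater's
    # marginal proportion for that label.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Invented example labels (not study data).
labels_rater_1 = ["fact", "fact", "value", "policy"]
labels_rater_2 = ["fact", "value", "value", "policy"]
kappa = cohens_kappa(labels_rater_1, labels_rater_2)
```

In this toy example the raters agree on three of four items (observed agreement 0.75) against a chance agreement of 0.3125, so kappa is 7/11, or about 0.64.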

RESULTS

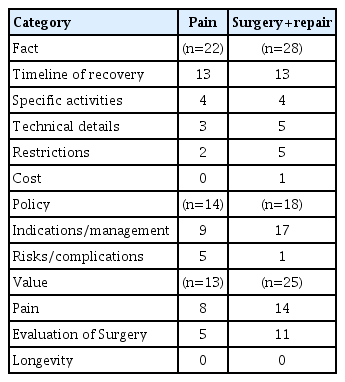

One hundred twenty questions were extracted across the two search groups: (1) 71 questions from the combined “rotator cuff surgery” and “rotator cuff repair” search strings and (2) 49 questions from “rotator cuff repair pain.” “Fact” questions were the most common Rothwell classification in both groups, accounting for 41.7% of all questions (39.4% and 44.9%, respectively). Policy questions represented 26.7% of the total (25.4% and 28.6%, respectively), and value questions represented 31.7% (35.2% and 26.5%, respectively). When all search terms were combined, the most common subcategories were timeline of recovery (21.7%), indications/management (21.7%), and pain (18.3%). For the combined “rotator cuff surgery” and “rotator cuff repair” strings, the most common question subcategories were indications/management (23.9%), pain (19.7%), and timeline of recovery (18.3%). For “rotator cuff repair pain,” the most common question subcategories were timeline of recovery (26.5%), indications/management (18.4%), and pain (16.3%) (Table 3).
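The overall percentages are count-weighted pools of the two search groups rather than simple averages of the group percentages. The Python sketch below illustrates the arithmetic. The per-group counts are an assumption: they are back-calculated here from the reported percentages and group sizes (71 and 49), not taken from Table 3, and the variable and function names are hypothetical.

```python
# Group sizes reported in the text.
N_SURGERY_REPAIR = 71   # "rotator cuff surgery" + "rotator cuff repair"
N_REPAIR_PAIN = 49      # "rotator cuff repair pain"

# ASSUMPTION: counts back-calculated from the reported percentages,
# listed as (surgery/repair group, repair pain group).
rothwell_counts = {
    "fact": (28, 22),
    "policy": (18, 14),
    "value": (25, 13),
}


def percent(count, total):
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * count / total, 1)


def pooled_percent(counts):
    """Pool both groups by summing counts before dividing by the total."""
    return percent(sum(counts), N_SURGERY_REPAIR + N_REPAIR_PAIN)


pooled = {name: pooled_percent(c) for name, c in rothwell_counts.items()}
per_group = {
    name: (percent(c[0], N_SURGERY_REPAIR), percent(c[1], N_REPAIR_PAIN))
    for name, c in rothwell_counts.items()
}
```

Under these assumed counts, the pooled values reproduce the reported 41.7% (fact), 26.7% (policy), and 31.7% (value), and the per-group values reproduce the reported group percentages.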

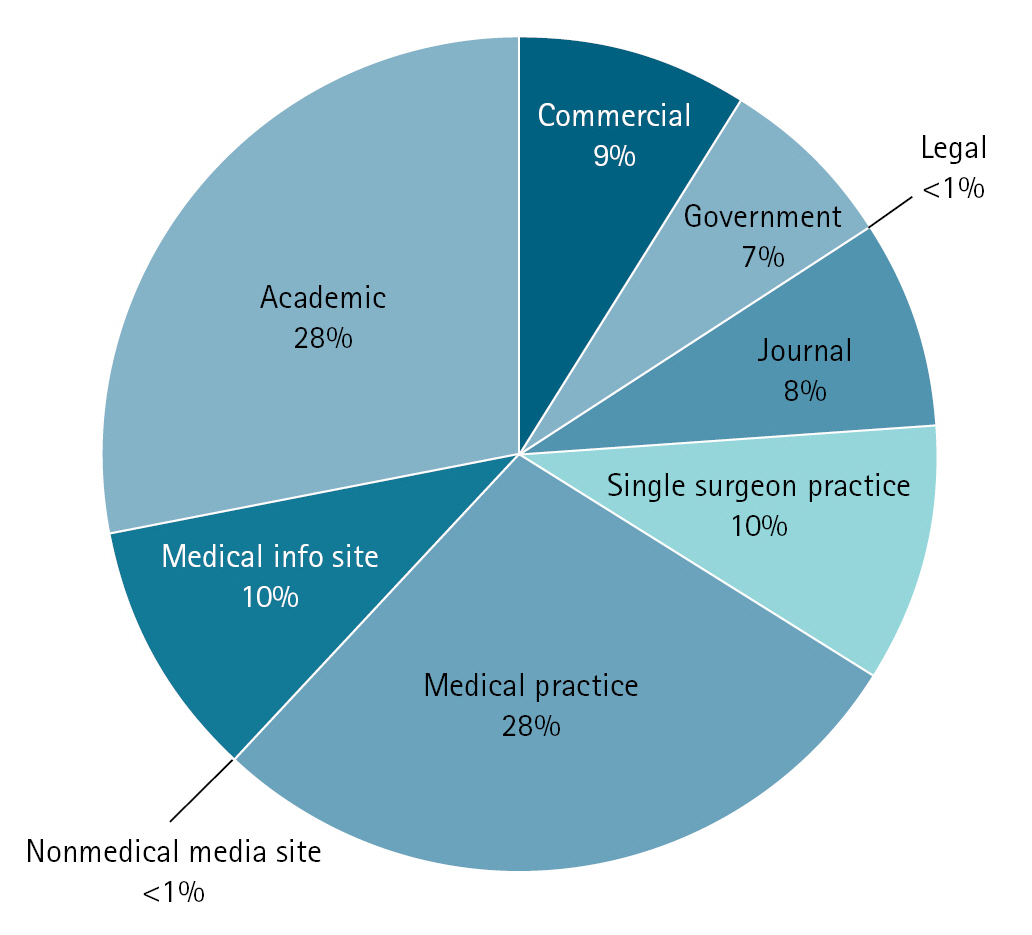

For the combined search strings of “rotator cuff surgery” and “rotator cuff repair,” the most common types of webpages were academic (28.2%), medical practice (26.8%), and medical information sites (14.1%). For “rotator cuff repair pain,” the most common types of webpages were academic (28.6%), medical practice (28.6%), and commercial (12.2%). Webpage distribution is shown in Table 4. Combined data for the two query groups are presented in Fig. 1.

The mean JAMA score for all 120 webpages was 1.50. The websites with the highest mean JAMA scores were journal websites (mean, 3.77). The websites with the lowest mean JAMA scores were commercial websites (mean, 0.91) and medical practice websites (mean, 0.98). Means for the remaining categories were as follows: academic=1.03, government=2.44, medical information sites=2.75, and single-surgeon practice=1.08. Cohen’s kappa coefficient for interrater reliability showed near-perfect strength of agreement for both question categorization (0.83) and website categorization (0.96).

DISCUSSION

The key findings of this study are as follows: (1) the most commonly searched question for rotator cuff repair/surgery is “Is rotator cuff surgery worth undergoing?” and the most commonly searched question for rotator cuff repair pain is “What happens if a rotator cuff is not repaired?”; (2) the most common Rothwell classification is fact (41.7% overall); (3) the most common subcategory of questions in rotator cuff repair/surgery searches is indications/management (23.9%), while the most common subcategory of questions for rotator cuff repair pain is timeline of recovery (26.5%); (4) overall, the most frequently encountered websites were academic (28.3%) and medical practice sites (27.5%); and (5) most webpages scored poorly on JAMA benchmark criteria, with commercial websites scoring the worst.

The largest share of questions obtained in this analysis was fact-based (41.7%), including questions about the veracity of information. This suggests that patients are searching for objective information regarding rotator cuff surgery or pain. Three of the most popular “fact” subcategories (specific activities, timeline of recovery, and restrictions) reveal that patients are concerned with the duration and type of limitations resulting from surgery. Many of the questions were phrased as, “What happens at X weeks after surgery?” or “Is XYZ activity good to perform/allowed after surgery?” The most frequently encountered question category in the “rotator cuff repair pain” search was timeline of recovery (26.5%). This illustrates the importance of giving patients clear, time-based expectations for postoperative pain, as well as for preferred multimodal analgesics given the abundance of medications and nerve blocks available [22]. Rotator cuff surgery recovery requires shoulder immobilization in a sling for several weeks; however, range of motion, physical therapy, and graduated strengthening are paramount to long-term success [23]. High heterogeneity in rotator cuff repair rehabilitation protocols has been previously reported [24]. It would benefit surgeons to provide patients with details about their specific protocols so that patients do not find alternative protocols online. Multiple studies have reported that appropriately aligned expectations regarding functional outcomes are correlated with improved postoperative outcomes following rotator cuff repair [25-27].

Unsurprisingly, the most asked question category for “rotator cuff repair/surgery” searches pertained to indications/management (23.9%). Patients search various questions including, “What happens if a torn rotator cuff goes untreated?” or “Can a rotator cuff heal on its own?” to determine the necessity of surgery. Options for management of partial and full-thickness rotator cuff tears, including when to refer a patient for repair, are evolving and differ from surgeon to surgeon [28]. This highlights the importance of clearly outlining indications for rotator cuff repair as a part of preoperative counseling. If management plans (together with alternative options) are not thoroughly explained in clinic, patients may search online for answers. This important discussion with patients helps build rapport and addresses the most frequently asked questions, “Is rotator cuff surgery worth undergoing?” and “What happens if a rotator cuff is not repaired?” For patients to understand and accept the answers to these clinical questions, they must possess a basic understanding of the risks, benefits, and alternatives available to them. If time constraints allow physicians to answer only a few questions during preoperative consultation, we recommend the two questions above as a standard of care to cover patients’ most fundamental concerns.

Of the 120 searches, the most frequently linked webpages were academic and medical practice pages (28.3% and 27.5%, respectively). However, these two categories were rated poorly according to JAMA benchmark criteria, scoring 1.03 and 0.99, respectively. These informational webpages rarely revealed their authors, references, or relevant disclosures. Previous research finds that 52% of users of internet health sites think that “almost all” or “most” of the information found on pertinent websites is credible [29]. Orthopedic surgeons currently face two scenarios in consultations with patients [30]. Prior to a visit, a patient may utilize the internet to self-diagnose with inaccurate information, which then requires the physician to correct the patient. Following a visit, patients may search the internet and return with information that confuses them or that potentially contradicts the physician’s opinion. If information known by experts to be faulty is deemed credible by patients, an awkward conversation ensues wherein surgeons must spend time correcting patients’ online research. While cumbersome, these exchanges are imperative insofar as preoperative education has been shown to improve postoperative clinical outcomes [6-10]. Cassidy and Baker [17] reported on 38 peer-reviewed articles analyzing the quality or readability of online orthopedic information. Many of the articles comment either on the poor quality of information currently available to patients or that high-quality sources are not available for appropriate public comprehension. Given the high prevalence of internet searches for healthcare-related topics, together with patients’ high levels of trust in these sites, there is a need for improvement in informational publishing practices from orthopedic sources. As contributors to many of these academic and medical practice websites, part of the burden to improve scientific rigor on these sites falls to orthopedic surgeons and other practitioners involved in patient education.

Because there is not currently a standardized instrument to assess the informational quality of a given healthcare website, JAMA benchmark criteria remain a tool to reflect scientific transparency for patients. The average JAMA score in this study was 1.5 of 4.0, reflecting the overall poor quality of online medical information. Interestingly, commercial, for-profit websites, which were the third most retrieved webpages when searching for rotator cuff repair pain (12.2%), had the worst JAMA score (0.91). This is not surprising given the vested interest of companies in their promotion of medications or implants. Providers must remain informed about the companies providing medical information to patients and remain wary of any potential conflicts of interest they propagate. The highest scores among sites belonged to scientific journals (3.8), which were nearly universally shown to highlight authorship, references, currency, and disclosures. One caveat of these sources is opacity in their readability and comprehension for non-healthcare professionals. It is important for surgeons to be aware of the most up-to-date literature in order to condense and share this knowledge with patients in an understandable way. Prior studies have examined the informational quality of orthopedic information on the internet and similarly concluded that patients should exercise caution when searching for medical information in deference to sources recommended by surgeons [17,20,21].

The major limitation of this study is its utilization of Google Search. The Google “People also ask” function uses proprietary machine learning to anticipate what questions a user may ask next based on previously accumulated data from web users. Results provided by the algorithm related to rotator cuff surgery will vary depending on a given individual’s search history. We mitigated this variability by including a large sample of questions and by performing all searches on a cleanly installed web browser with no previously conducted searches. Similarly, it is impossible to confirm that patients with rotator cuff pathology are the users whose searches inform the Google “People also ask” function, so the starting point of this study rests on an inherent assumption. A corresponding advantage of this approach is that users are given complete anonymity when posing their searches online, unlike with clinician-administered surveys. The JAMA benchmark criteria are an imperfect measure of the quality of website content, primarily intended as a proxy for transparency and publishing practices, as pointed out by Shen et al. [16]. Future studies should examine the differences between questions that patients search on the internet and questions they ask their surgeons in person.

CONCLUSIONS

The most common queries in Google Search pertaining to rotator cuff repair are questions that evaluate management options, as well as questions related to the timeline of recovery and pain management. Most of the information is provided by medical practice, academic, and medical information websites, which have highly variable reliability. By understanding the questions that patients are asking online, surgeons can tailor preoperative education to common patient concerns and improve postoperative outcomes.

Notes

Author contributions

Conceptualization: AJH, JRM, GEG. Data curation: AJH, KDC. Formal Analysis: AJH, KDC. Investigation: JRM, KDC. Methodology: AJH, JRM, DD, MRC, NNV, GN. Supervision: DD, MRC, NNV, GN, GEG. Writing – original draft: AJH. Writing – review & editing: AJH, JRM, DD, MRC, NNV, GN, GEG.

Conflict of interest

None.

Funding

None.

Data availability

Contact the corresponding author for data availability.

Acknowledgments

None.

SUPPLEMENTARY MATERIALS

Supplementary materials can be found via https://doi.org/10.5397/cise.2022.01235.

Supplementary Table 1. Description and examples of each type of website classification